Experiment Length:

Estimated Time: 7 hours

Actual Time: 7 hours

Summary:

This was a fun experiment in seeing how we might convert customer behavior analytics into UI improvements. Traditionally, this process involves a manual, somewhat tedious review of heatmaps, followed by product managers implementing UI changes. In this experiment, we aimed to automate that process by using GPT-Vision to analyze heatmaps and suggest UI code improvements directly.

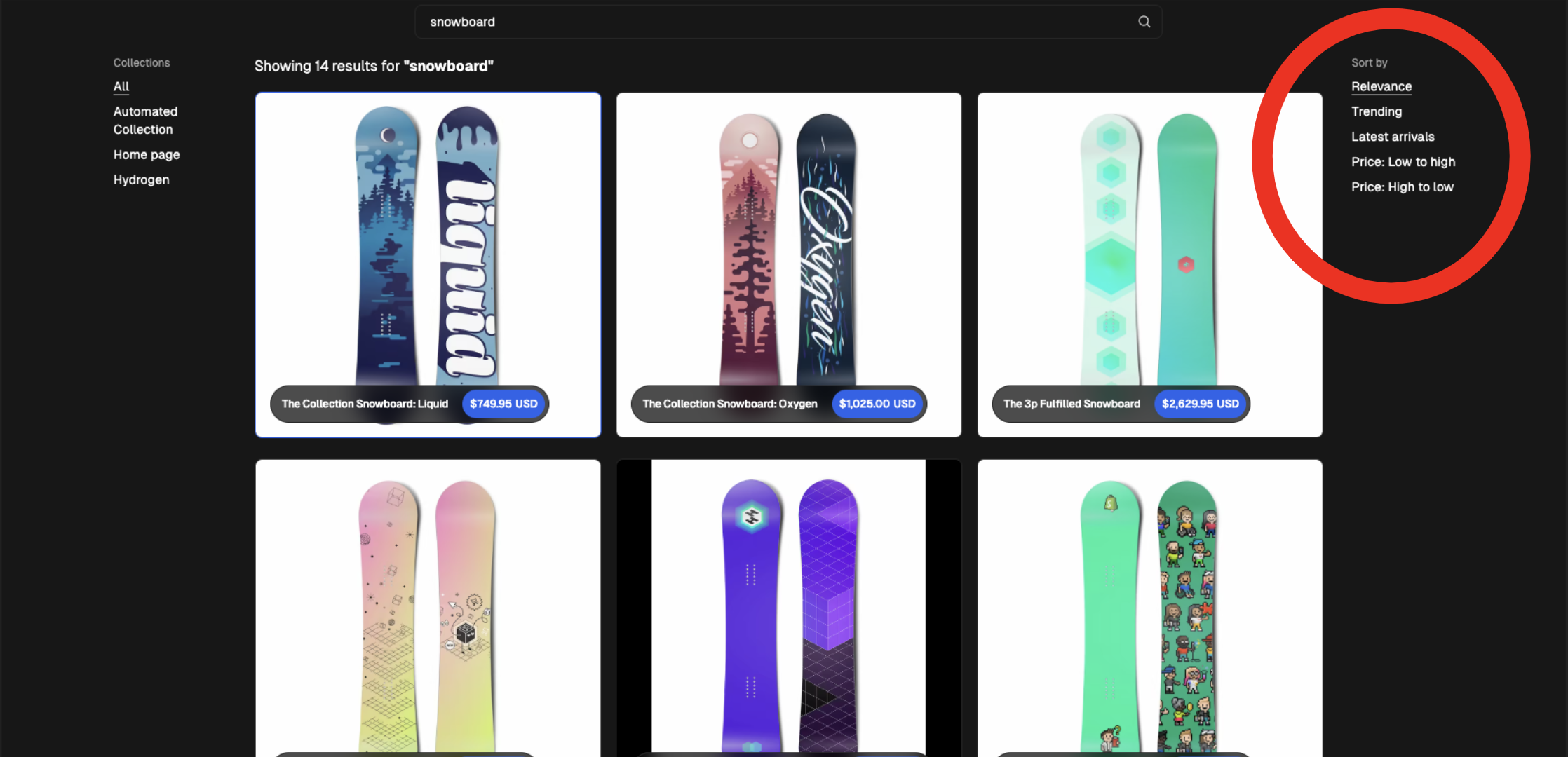

We used a click heatmap, which shows where users click on your site.

The essence of this approach lies in its potential to streamline the UI development process, making it quicker and more responsive to user interactions. The experiment involved technologies like GPT-Vision, Tailwind, Next.js, React, and Heatmap.js. The goal was to create a system that could not only analyze user interaction data but also translate these insights into practical UI code enhancements. If it works reliably, this methodology could make A/B testing and UI design a much more dynamic and data-driven process.
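As a rough illustration of the interaction data behind a click heatmap, the sketch below buckets raw click coordinates into weighted points. The `Click` and `HeatPoint` types, the `aggregateClicks` name, and the 20-pixel cell size are assumptions made for this example and are not taken from the experiment's code.

```typescript
// Hypothetical shapes: `Click` is a raw click position in page pixels, and
// `HeatPoint` is a weighted point of the kind a heatmap renderer draws.
interface Click { x: number; y: number; }
interface HeatPoint { x: number; y: number; value: number; }
interface HeatmapData { max: number; data: HeatPoint[]; }

// Bucket clicks into square cells and count how many land in each cell.
function aggregateClicks(clicks: Click[], cellSize: number = 20): HeatmapData {
  const cells = new Map<string, HeatPoint>();
  for (const click of clicks) {
    // Snap each click to the centre of the cell it falls in.
    const cx = Math.floor(click.x / cellSize) * cellSize + cellSize / 2;
    const cy = Math.floor(click.y / cellSize) * cellSize + cellSize / 2;
    const key = cx + "," + cy;
    const existing = cells.get(key);
    if (existing) {
      existing.value += 1;
    } else {
      cells.set(key, { x: cx, y: cy, value: 1 });
    }
  }
  const data = Array.from(cells.values());
  // `max` is the busiest cell's count, used to scale the colour gradient.
  const max = data.reduce((m, p) => Math.max(m, p.value), 0);
  return { max, data };
}
```

The resulting `{ max, data }` object is meant to resemble the input that Heatmap.js takes via `setData`, but that should be checked against the library version in use.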

Plan:

The process was designed to be straightforward yet innovative:

-

Heatmap Analysis: Utilizing GPT-Vision to analyze heatmaps generated from user interactions on a UI.

-

Suggestions for UI Improvements: Based on this analysis, GPT-Vision would suggest specific UI changes.

- Prompt: ‘Analyse user heatmap and suggest one simple change in the UI layout of the website elements. Be very specific so that we can implement these suggestions directly in the code. For example: Make the “buy” button 2 times larger, or Move button “buy” to the top’

-

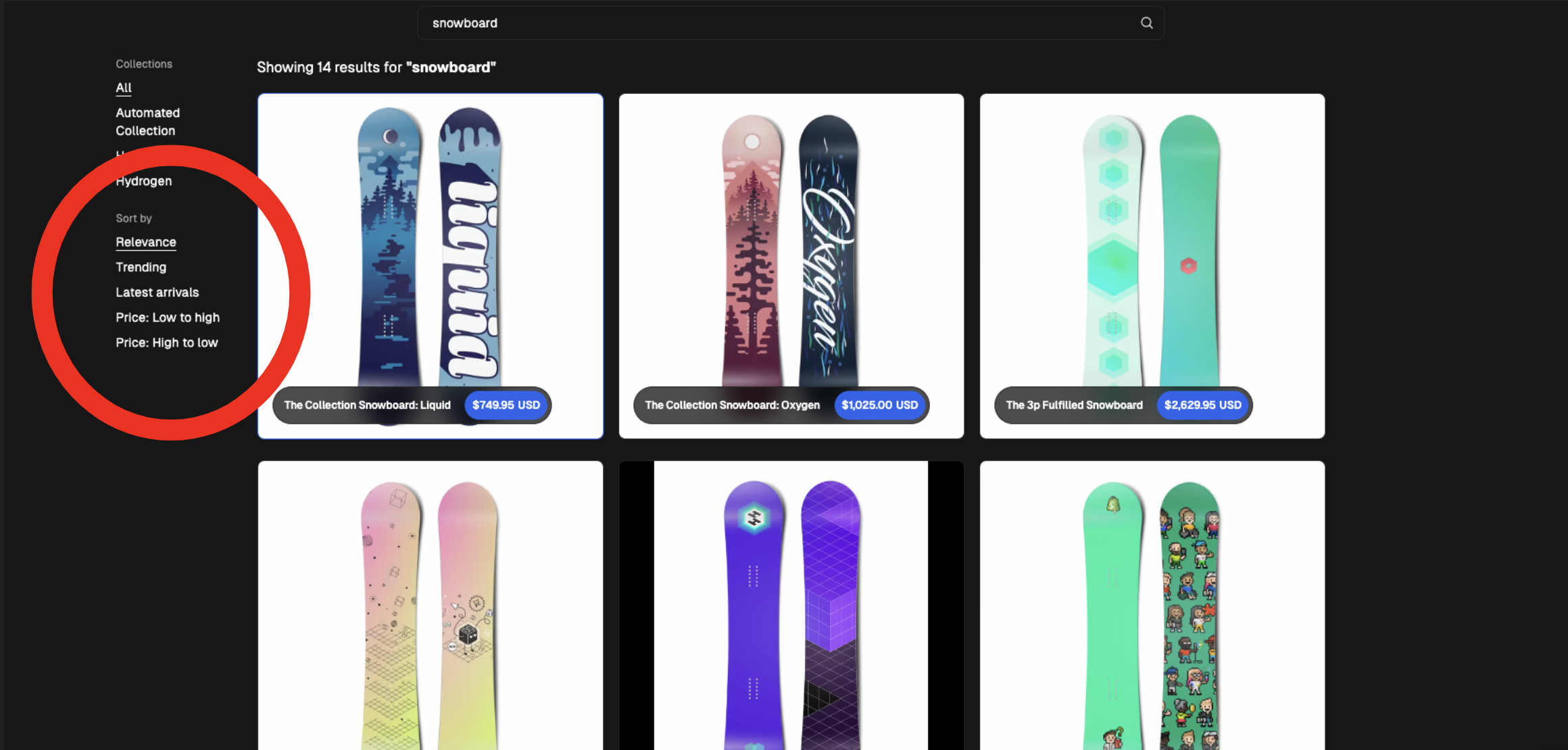

Converting Suggestions to Technical Requirements: These suggestions would then be converted into technical requirements.

- Prompt: ‘Rewrite the above suggested changes as technical requirements to make them very clear to a technical person, in order to be implemented on the website’

-

Code Implementation: Using tools like Tailwind, Next.js, and React, these requirements would be translated into actual UI code.

- Prompt: ‘Change the code following instructions’

This approach promised to automate a significant portion of the UI development process, turning heatmap analytics directly into code changes without the need for manual interpretation.
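To make the prompt chain more concrete, here is a minimal sketch of how the three prompts could be assembled into chat messages. The message shape is modelled on the OpenAI Chat Completions format for image inputs. The function names, the way the code and requirements are concatenated in the last step, and the choice to keep steps one and two in a single conversation are all assumptions, since the post does not show how the calls were actually wired together.

```typescript
// Message shape modelled on the OpenAI Chat Completions format (an assumption).
type ContentPart =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

interface ChatMessage {
  role: "user" | "assistant";
  content: string | ContentPart[];
}

// The three prompts quoted in the plan, in order.
const ANALYSIS_PROMPT =
  "Analyse user heatmap and suggest one simple change in the UI layout of the " +
  "website elements. Be very specific so that we can implement these suggestions " +
  "directly in the code. For example: Make the “buy” button 2 times larger, " +
  "or Move button “buy” to the top";
const REQUIREMENTS_PROMPT =
  "Rewrite the above suggested changes as technical requirements to make them " +
  "very clear to a technical person, in order to be implemented on the website";
const CODE_PROMPT = "Change the code following instructions";

// Step 1: send the heatmap image together with the analysis prompt.
function analysisMessages(heatmapImageUrl: string): ChatMessage[] {
  return [
    {
      role: "user",
      content: [
        { type: "text", text: ANALYSIS_PROMPT },
        { type: "image_url", image_url: { url: heatmapImageUrl } },
      ],
    },
  ];
}

// Step 2: continue the same conversation so that "the above suggested changes"
// refers to the model's own reply from step 1.
function requirementsMessages(previous: ChatMessage[], suggestion: string): ChatMessage[] {
  return [
    ...previous,
    { role: "assistant", content: suggestion },
    { role: "user", content: REQUIREMENTS_PROMPT },
  ];
}

// Step 3: a fresh request carrying the requirements and the current UI code.
function codeMessages(requirements: string, currentCode: string): ChatMessage[] {
  return [
    {
      role: "user",
      content:
        CODE_PROMPT + "\n\nInstructions:\n" + requirements + "\n\nCode:\n" + currentCode,
    },
  ];
}
```

Each function only builds the messages; sending them to a vision-capable model and reading back the reply is left to whichever client is in use.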

Result: 🟢 Pass

The experiment can be deemed a success. The recommendations provided by GPT-Vision based on the heatmaps were generally accurate and sensible. In several instances, the suggested UI code changes were directly applicable and enhanced the user interface as predicted.

However, it’s important to note that while the logic behind heatmap analysis and recommendation was sound, the actual code generation part of the process still requires refinement. The integration of the suggested code into the existing UI was successful in some cases, but not universally.
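One possible guard for the code generation step, offered as a suggestion and not something the experiment did, is to pull the code out of the model's reply and skip the change when no complete fenced block is found. The `extractCode` name and the assumption that the model answers with a fenced block are both hypothetical.

```typescript
// Three backticks, built at runtime so this example can itself sit in a fence.
const FENCE = "`" + "`" + "`";

// Return the body of the first complete fenced block in a reply,
// or null when the reply has no opening fence or no closing fence.
function extractCode(reply: string): string | null {
  const open = reply.indexOf(FENCE);
  if (open === -1) return null;
  // Skip the rest of the opening line, which may carry a language tag.
  const lineEnd = reply.indexOf("\n", open);
  if (lineEnd === -1) return null;
  const close = reply.indexOf(FENCE, lineEnd);
  if (close === -1) return null;
  // Drop trailing whitespace left before the closing fence.
  return reply.slice(lineEnd + 1, close).replace(/\s+$/, "");
}
```

A caller would treat a `null` result as "do not touch the UI" and either retry the prompt or fall back to a manual change.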

Interface Before :

Interface After:

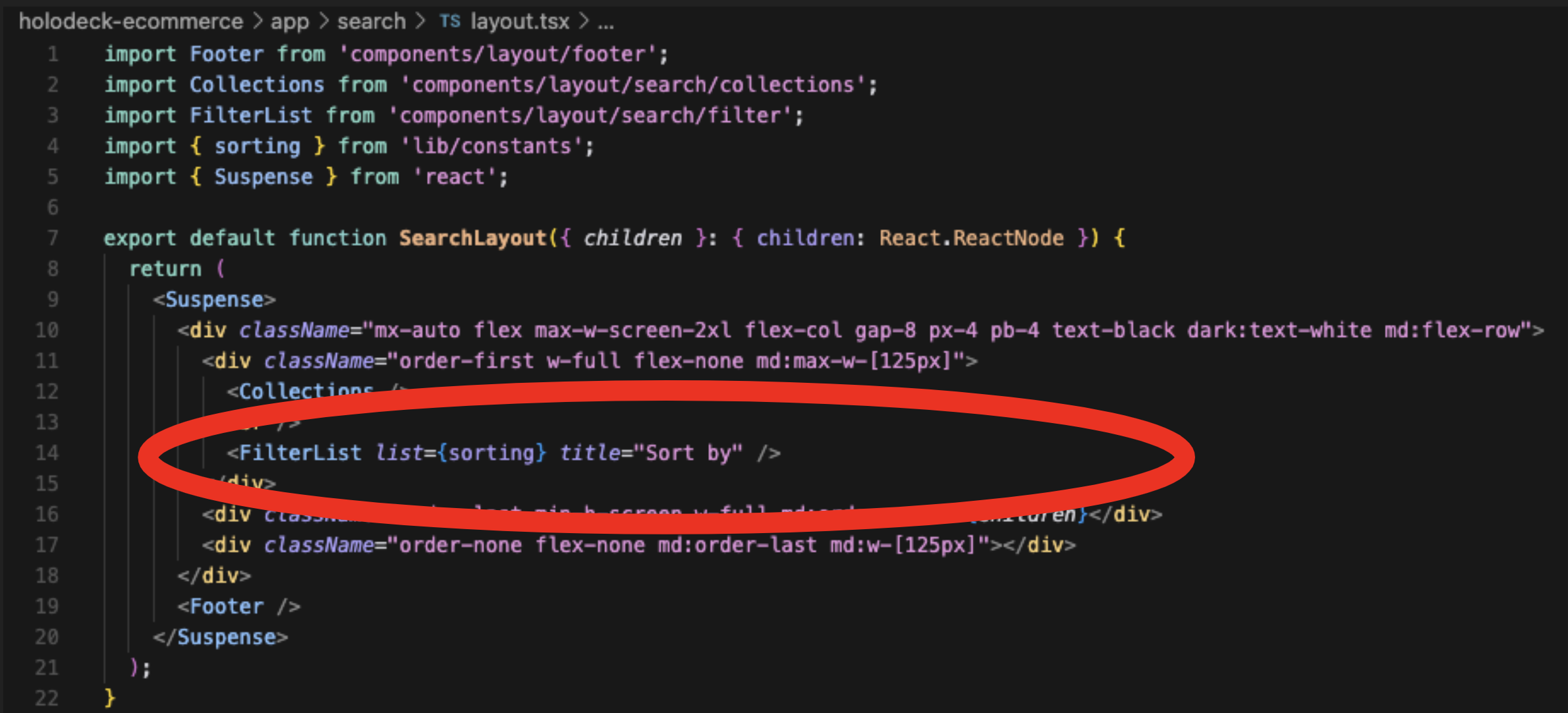

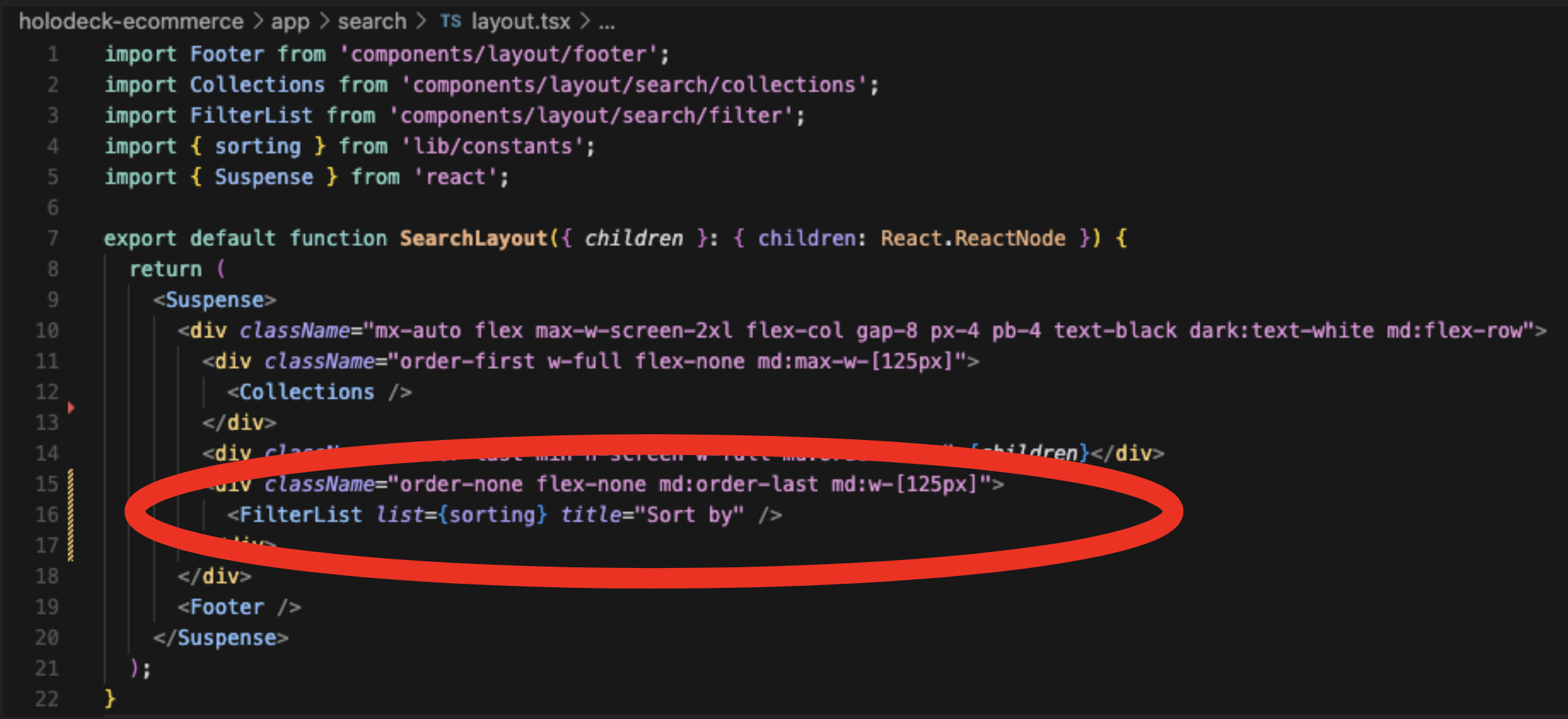

Code Before:

Code After:

What I Learned:

The main takeaway from this experiment is the promising potential of automating UI design based on user analytics. The analysis and recommendation parts of the process functioned effectively and consistently. However, the code generation and integration aspect needs further development.

My personal learning curve was steep but rewarding, especially in terms of utilizing GPT-Vision. Although it was not part of the final demonstration, experimenting with Vim-GPT to auto-generate the heatmap was an intriguing side exploration. Vim-GPT’s combination of GPT-Vision with Vimium for browser task automation provided valuable insights into potential future applications.

Overall, the experience was my first with GPT-Vision, and its capabilities in analyzing visual data were impressive!

The experiment was worked on during an AGIHouse hackathon with Devin Liu and Matt Diakonov!

This blog post was generated by combining my messy notes with ChatGPT ;) The title image was generated using Midjourney with the blog post title as the prompt. ✨