#concept-pamphlet #todo: take what I want from the class

Cheatsheet

Create flashcards for every term below. Keep each answer concise and accurate, and use equations where possible.

- word vectors

- back propagation

- neural networks

- encoder

- decoder

- dependency parsing

- recurrent neural networks

- fancy RNNs (e.g. LSTM, GRU)

- recursive neural networks

- language models

- n-gram

- vanishing gradients

- seq2seq

- machine translation

- attention

- subword models

- transformers

- BERT

- ELMo

- transfer learning

- pre-training

- knowledge-pretrained language models

- question answering

- natural language generation

- bias in models

- retrieval augmented models + knowledge

- LaMDA

- convolutional neural networks

- tree recursive neural networks

- constituency parsing

- scaling laws for language models

- coreference

- editing neural networks

Reference

Skeleton

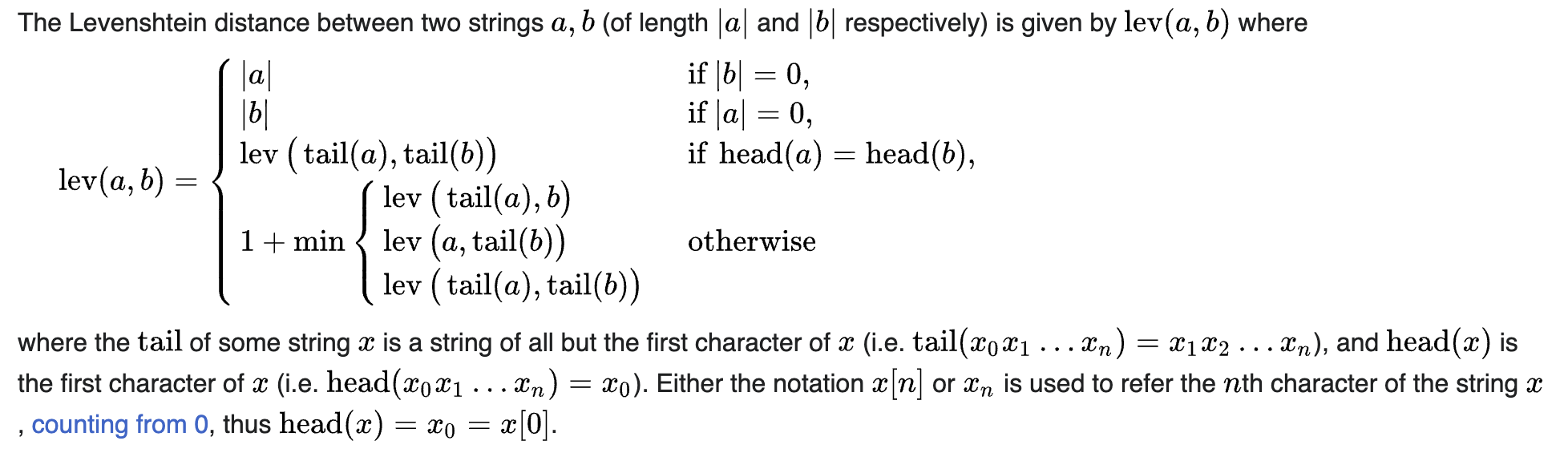

Levenshtein distance equation

?

It is the minimum number of single-character edits (insertions, deletions, or substitutions) required to change one word into another. It tells you how different two strings are: the higher the number, the more different the strings. Introduced by Vladimir Levenshtein in 1965.
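
As an equation, one common way to write it is the recurrence below. The symbols are my own labels, not from the source: $a$ and $b$ are the two strings, $a_i$ is the $i$-th character of $a$, and $\operatorname{lev}(i, j)$ is the distance between the first $i$ characters of $a$ and the first $j$ characters of $b$. The bracket $[a_i \neq b_j]$ is 1 when the characters differ and 0 otherwise.

$$
\operatorname{lev}(i, j) =
\begin{cases}
\max(i, j) & \text{if } \min(i, j) = 0 \\
\min
\begin{cases}
\operatorname{lev}(i-1, j) + 1 \\
\operatorname{lev}(i, j-1) + 1 \\
\operatorname{lev}(i-1, j-1) + [a_i \neq b_j]
\end{cases}
& \text{otherwise}
\end{cases}
$$

The three options in the inner $\min$ correspond to deleting a character, inserting a character, and substituting (or matching) a character. The full distance is $\operatorname{lev}(|a|, |b|)$.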

source: https://en.wikipedia.org/wiki/Levenshtein_distance

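
To make the Levenshtein definition concrete, here is a minimal sketch of the standard dynamic-programming approach, assuming every edit (insertion, deletion, substitution) costs 1. The function name `levenshtein` and the variable names are my own choices for illustration, not from the source.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance with unit-cost insertion, deletion, and substitution."""
    # prev[j] = distance between the prefix of `a` processed so far
    # and the first j characters of `b`. Start with the empty prefix of `a`.
    prev = list(range(len(b) + 1))

    for i, ca in enumerate(a, start=1):
        # Turning the first i characters of `a` into "" takes i deletions.
        curr = [i]
        for j, cb in enumerate(b, start=1):
            substitution_cost = 0 if ca == cb else 1
            curr.append(
                min(
                    prev[j] + 1,                      # delete ca
                    curr[j - 1] + 1,                  # insert cb
                    prev[j - 1] + substitution_cost,  # substitute or match
                )
            )
        prev = curr

    return prev[-1]


print(levenshtein("kitten", "sitting"))  # 3
```

Only two rows of the table are kept at a time, so memory is proportional to the length of `b` rather than to the product of both lengths.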

other helpful stuff

https://twitter.com/yimatweets/status/1578082015668146177?s=46&t=iKJdkAuqqJUYFrftwrlOHA

- “Can principles lead to purely explainable whitebox deep networks that work the best in all settings: supervised, incremental, unsupervised, classification, generative, and autoencoding, with smaller networks and less data/computation?”

https://web.stanford.edu/class/cs224n/index.html#schedule

Concepts

- Bag of words

- K nearest neighbor

Related: ML engineering

- Linear regression

- Logistic regression

- Evaluation metrics

- Docker

- web services

- cloud

- Model deployment

- Tree-based models

- Neural networks

- Kubernetes