#concept

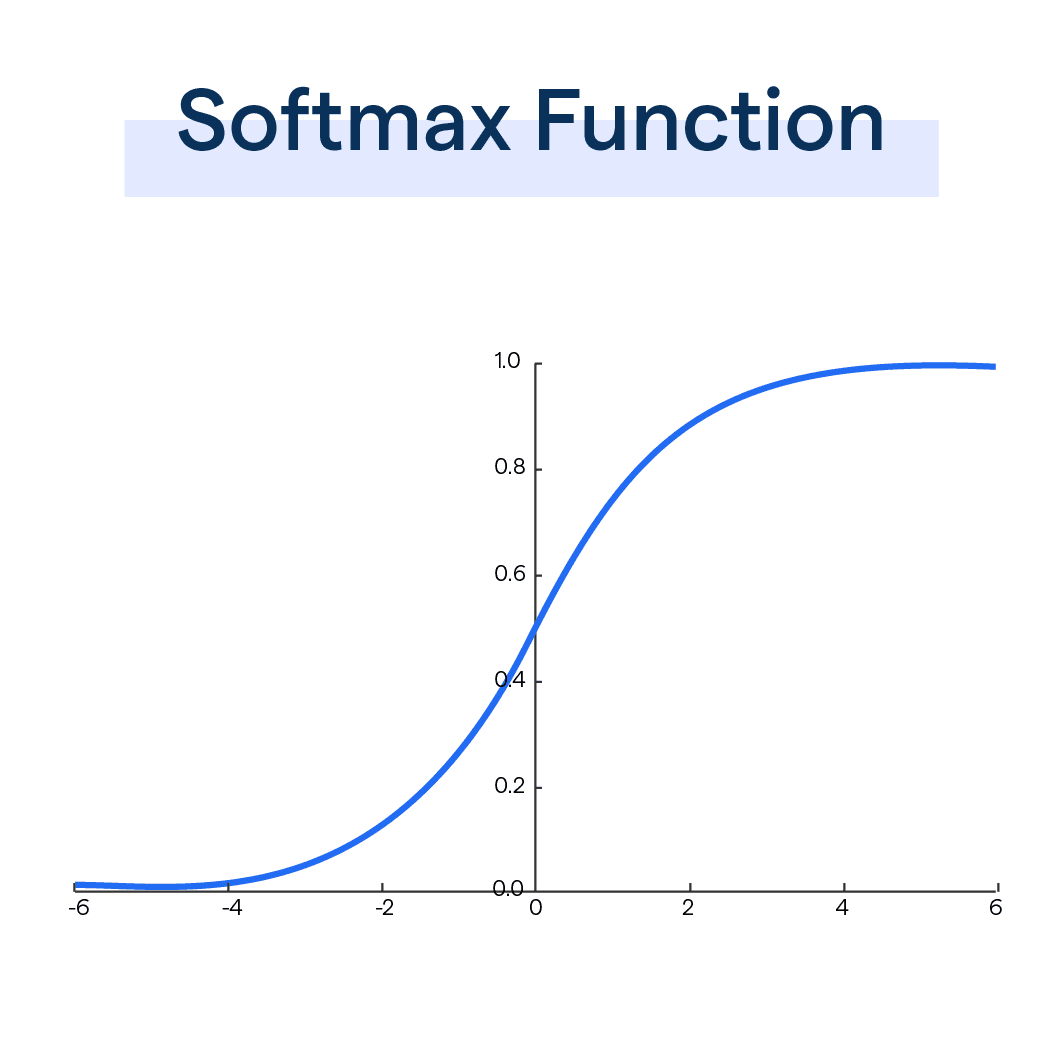

Softmax turns a vector of real values (e.g., a model's raw output scores, or logits) into

?

probabilities that sum to 1.

It is used as an activation function.

use of softmax in a neural network >> It is often used as the last activation function of a neural network, to normalize the network's output into a probability distribution over the predicted output classes.
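
A minimal sketch of softmax as the final activation, in plain Python. The class names and logit values are hypothetical stand-ins for a network's raw last-layer outputs; the predicted class is the one with the highest probability.

```python
import math

def softmax(z):
    """Map a list of real scores (logits) to probabilities that sum to 1."""
    m = max(z)  # subtract the max for numerical stability; result is unchanged
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw outputs (logits) from a network's last layer, one per class.
class_names = ["cat", "dog", "bird"]
logits = [2.0, 1.0, 0.1]

probs = softmax(logits)                              # probability distribution over classes
predicted = class_names[probs.index(max(probs))]     # class with the highest probability

for name, p in zip(class_names, probs):
    print(f"{name}: {p:.3f}")
print("predicted:", predicted)
```

Because `exp` is increasing, softmax never changes which class scores highest; it only rescales the scores into a distribution, which is what lets the output be read as class probabilities.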

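The standard (unit) softmax function is

$$\sigma(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}} \quad \text{for } i = 1, \dots, K,$$

where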

- $\sigma$ is the output: a vector of real numbers, each between 0 and 1, that sum to 1

- $\mathbf{z}$ is the input vector

- $K$ is the length of the input (and output) vector; in classification, the number of classes
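
The softmax equation translates almost line for line into Python. A minimal sketch using only the standard library (the function names are illustrative): `softmax_naive` mirrors the formula directly, while `softmax_stable` first subtracts `max(z)`. That shift leaves the result unchanged, because the common factor $e^{-c}$ cancels between numerator and denominator, but it keeps `math.exp` from overflowing on large inputs.

```python
import math

def softmax_naive(z):
    """Direct translation of sigma(z)_i = exp(z_i) / sum_j exp(z_j), j = 1..K."""
    exps = [math.exp(z_i) for z_i in z]
    denom = sum(exps)
    return [e / denom for e in exps]

def softmax_stable(z):
    """Same result, but shifts z by max(z) so math.exp cannot overflow.

    Works because sigma(z - c) == sigma(z) for any constant c:
    the factor exp(-c) cancels between numerator and denominator.
    """
    m = max(z)
    exps = [math.exp(z_i - m) for z_i in z]
    denom = sum(exps)
    return [e / denom for e in exps]

z = [1.0, 2.0, 3.0]          # input vector, K = len(z) = 3
sigma = softmax_stable(z)
print(sigma)                 # each entry lies between 0 and 1
print(sum(sigma))            # approximately 1.0 (floating-point rounding)

# The naive form overflows for large inputs; the stable form does not.
big = [1000.0, 1001.0, 1002.0]
try:
    softmax_naive(big)
except OverflowError:
    print("naive softmax overflowed")
print(softmax_stable(big))   # same probabilities as for z, since big = z + 999
```

Subtracting the maximum is an implementation choice, not part of the definition; both functions compute the same $\sigma(\mathbf{z})$ whenever the naive version does not overflow.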

Re: CS224W, intuitively: softmax works well because you are maximizing the difference between the dot products of vectors (the target's score versus all the others), while normalizing across all dot products.
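
A minimal sketch of that intuition, assuming a setup where each score is the dot product of a query vector with a candidate vector (as in embedding models). The 2-D vectors and names below are hypothetical. It also checks that the log-probability of a target equals its dot product minus the log-sum-exp of all dot products, so raising it means pushing the target's dot product up relative to the rest.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 2-D embeddings; values are made up for illustration.
query = [1.0, 0.5]
candidates = {
    "a": [0.9, 0.6],    # points roughly the same way as query
    "b": [0.0, 1.0],
    "c": [-1.0, 0.2],   # points away from query
}

names = list(candidates)
scores = [dot(query, candidates[n]) for n in names]   # one dot product per candidate
probs = softmax(scores)                               # normalized across all dot products

for n, s, p in zip(names, scores, probs):
    print(f"{n}: dot={s:+.2f}  p={p:.3f}")

# log p(target) = score(target) - logsumexp(all scores): increasing it means
# increasing the target's dot product relative to (a smooth max of) all of them.
target = names.index("a")
m = max(scores)
logsumexp = m + math.log(sum(math.exp(s - m) for s in scores))
print(math.log(probs[target]), scores[target] - logsumexp)
```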